Today there are two main methods of scanning physical objects to produce 3D models: depth mapping, which relies on a depth sensor to measure depth, and photogrammetry, which builds a model from photographs taken from multiple angles. I wanted to develop an iPad app that would allow participants to scan their models. I tried various apps that used depth scanning and photogrammetry, and found that the LiDAR scanner on the back of the iPad struggled with small objects due to its low resolution. The front-facing Face ID sensor also produced poor models because it frequently lost tracking, likely because Apple designed its API pipeline for faces. With photogrammetry, participants often ended up with noisy and inaccurate models; Qlone by EyeCue Vision was the most accurate of the apps I tried.

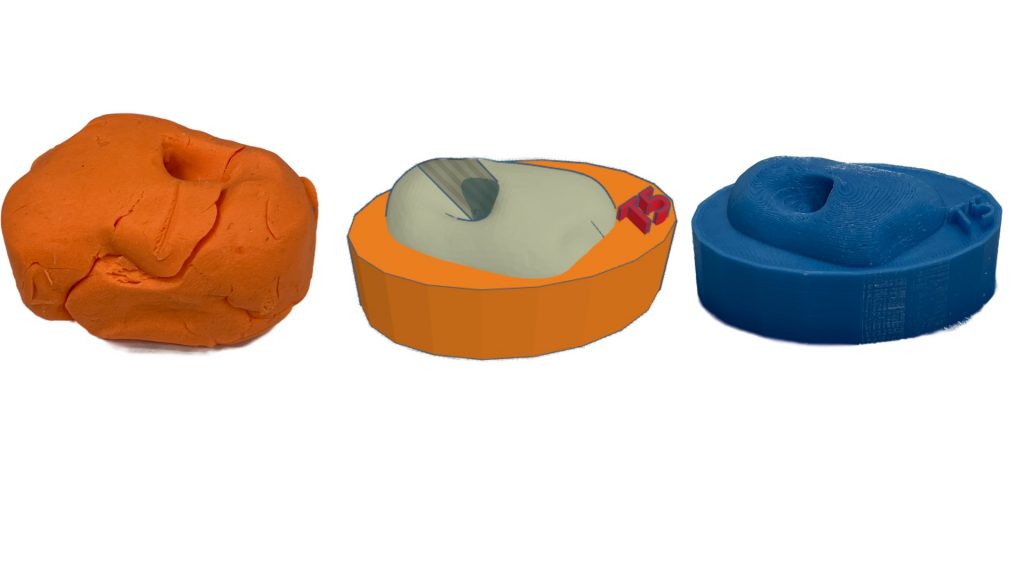

In a study with 12 participants, it became evident that participants found making models from clay significantly more accessible than modelling purely in CAD. The biggest challenge was scanning: participants felt that the scanned models were not accurate enough, were missing holes or had holes where there should be none, or were generally incorrect.

Based on these findings, I developed PlayCAD, an app that uses an object detection model to identify primitive shapes and generates a model that people can edit on the iPad. These shapes can then be modified, exported as an STL file, or 3D printed. The approach I’ve taken is guesstimation: the app captures data from the camera and uses it to set the size of each shape. It measures the X and Y dimensions and estimates the Z dimension as Z = X*Y/2. Currently, it can recognise primitive shapes and generate a model using the Euclid library.
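The sizing and export steps above can be illustrated with a small sketch. This is written in Python rather than Swift and is not PlayCAD's implementation (which uses the Euclid library); it assumes a hypothetical `Detection` record holding the detector's label and the X and Y extents already converted to millimetres, handles only a box primitive, and applies the Z = X*Y/2 heuristic exactly as stated. Taken literally, X*Y/2 scales with area rather than length, so the estimated Z depends on the unit chosen for X and Y. All function names here (`estimate_z`, `box_triangles`, `to_ascii_stl`, `detection_to_stl`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detector output: a shape label plus the measured
    X and Y extents (assumed to already be in millimetres)."""
    label: str
    x: float
    y: float


def estimate_z(x: float, y: float) -> float:
    # Z is not observed directly, so it is guessed with the
    # heuristic from the text: Z = X*Y/2.
    return x * y / 2


def box_triangles(x: float, y: float, z: float):
    """Return the 12 triangles of an axis-aligned box with one corner
    at the origin, wound counter-clockwise seen from outside."""
    # Vertex index = i*4 + j*2 + k, with i, j, k selecting 0 or the extent.
    v = [(i * x, j * y, k * z) for i in (0, 1) for j in (0, 1) for k in (0, 1)]
    faces = [
        (0, 1, 3, 2),  # x = 0 face
        (4, 6, 7, 5),  # x = X face
        (0, 4, 5, 1),  # y = 0 face
        (2, 3, 7, 6),  # y = Y face
        (0, 2, 6, 4),  # z = 0 face
        (1, 5, 7, 3),  # z = Z face
    ]
    tris = []
    for a, b, c, d in faces:
        # Split each quad face into two triangles with the same winding.
        tris.append((v[a], v[b], v[c]))
        tris.append((v[a], v[c], v[d]))
    return tris


def normal(a, b, c):
    """Unit normal of triangle abc via the cross product (b - a) x (c - a)."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = (nx * nx + ny * ny + nz * nz) ** 0.5 or 1.0
    return nx / length, ny / length, nz / length


def to_ascii_stl(name, triangles) -> str:
    """Serialise triangles in the ASCII STL facet/vertex format."""
    lines = [f"solid {name}"]
    for a, b, c in triangles:
        nx, ny, nz = normal(a, b, c)
        lines.append(f"  facet normal {nx:.6f} {ny:.6f} {nz:.6f}")
        lines.append("    outer loop")
        for px, py, pz in (a, b, c):
            lines.append(f"      vertex {px:.6f} {py:.6f} {pz:.6f}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


def detection_to_stl(det: Detection) -> str:
    # This sketch only knows how to build boxes; other primitives are out of scope.
    if det.label != "box":
        raise ValueError(f"sketch only handles boxes, got {det.label!r}")
    z = estimate_z(det.x, det.y)
    return to_ascii_stl(det.label, box_triangles(det.x, det.y, z))
```

For example, `detection_to_stl(Detection("box", 4.0, 3.0))` estimates Z as 6.0 and returns an ASCII STL with 12 facets, two per face of the box.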

As this is ongoing work, I would like to run a study with participants who are novices in 3D modelling, and a separate analysis with people who are proficient in 3D modelling, to understand their views on the app.